Using Finite Element Analysis to Predict Attenuation

This project explores how excess fiber length (EFL) and jacket diameter affect stress and signal loss in loose-tube optical fiber cables. Using SolidWorks and experimental data, I built and validated a simulation tool that predicts attenuation based on temperature-driven shrinkage and material interactions.

Simulation-Informed Design

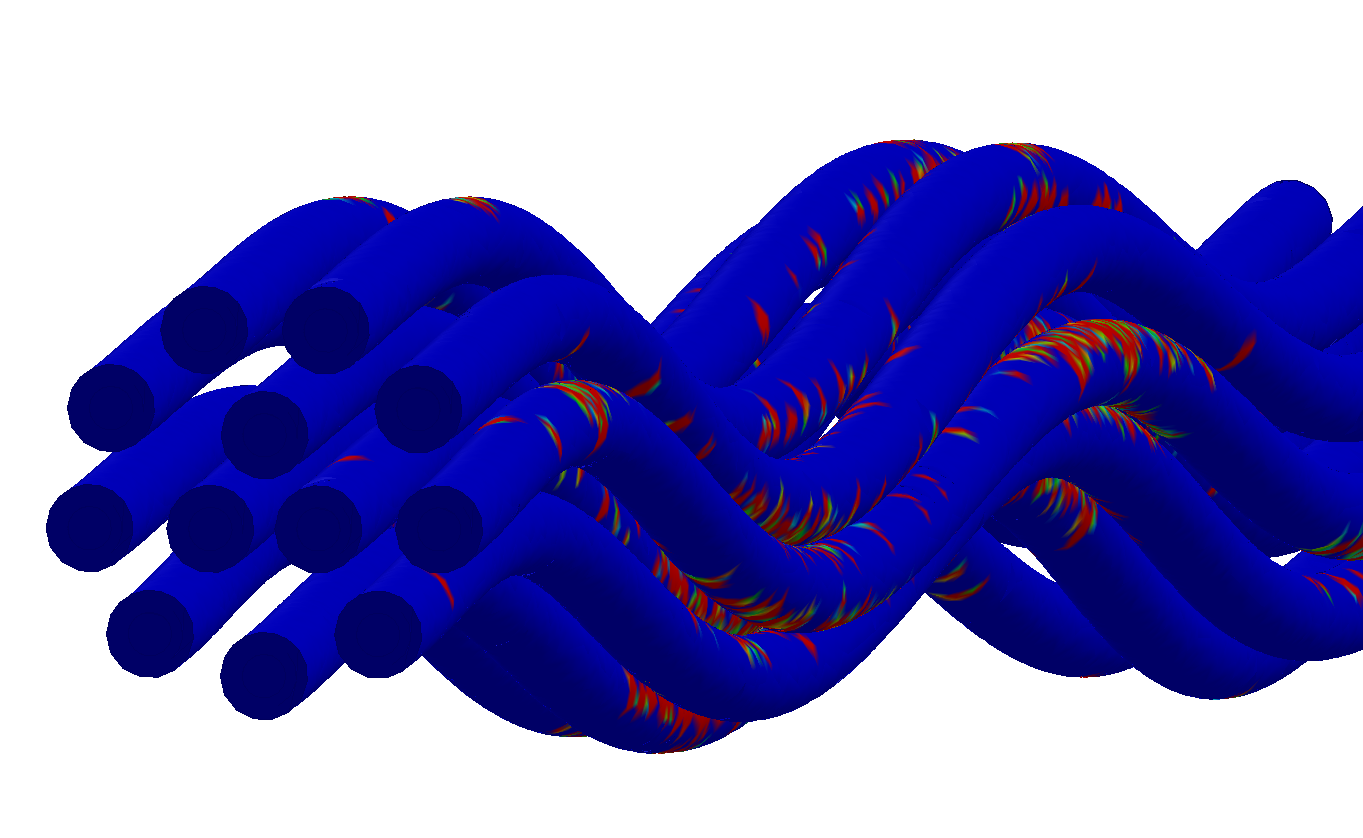

I developed a SolidWorks-based simulation to model how thermal shrinkage and internal fiber geometry influence optical attenuation. By capturing real-world contact between fibers and the cable jacket, the tool allows rapid iteration and optimization of cable designs.
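A short analytic model can link thermal shrinkage, EFL, bore size, and fiber stress, and it is handy as a sanity check on FE results. The Python sketch below is not the SolidWorks model. It makes several assumptions: the bore shrinks by α_tube·ΔT plus an optional post-extrusion strain; all resulting excess length is taken up by the fiber buckling into a helix against the bore wall; and the constants are typical placeholders (silica CTE, an assumed polymer CTE, 72 GPa glass modulus, 125 µm cladding, 250 µm coated fiber). All function names are hypothetical.

```python
import math

# Placeholder constants (assumptions, not values from this project).
ALPHA_FIBER = 0.55e-6   # 1/K, approximate CTE of silica glass
ALPHA_TUBE = 1.5e-4     # 1/K, assumed CTE of a polymer tube/jacket
E_GLASS = 72e3          # MPa, approximate Young's modulus of silica
GLASS_RADIUS = 0.0625   # mm, 125 um cladding diameter


def effective_efl(efl0, delta_t, alpha_fiber=ALPHA_FIBER,
                  alpha_tube=ALPHA_TUBE, post_shrink=0.0):
    """EFL after a temperature change delta_t (K).

    efl0 = (L_fiber - L_tube) / L_tube at the reference temperature.
    post_shrink is an optional extra axial strain of the tube (negative for
    shrinkage), e.g. post-extrusion relaxation; hypothetical input.
    """
    fiber_scale = 1.0 + alpha_fiber * delta_t
    tube_scale = 1.0 + alpha_tube * delta_t + post_shrink
    return (1.0 + efl0) * fiber_scale / tube_scale - 1.0


def helix_bend_radius(efl, inner_diameter, fiber_diameter=0.25):
    """Bend radius (mm) of a fiber whose excess length forms a helix against
    the bore wall. Assumes helical buckling and a 250 um coated fiber."""
    if efl <= 0:
        return math.inf  # no excess length: fiber stays straight
    r = (inner_diameter - fiber_diameter) / 2.0  # helix radius of fiber axis
    # One pitch p of tube holds p * (1 + efl) of fiber:
    # sqrt(p**2 + (2*pi*r)**2) = p * (1 + efl)
    p = 2.0 * math.pi * r / math.sqrt((1.0 + efl) ** 2 - 1.0)
    c = p / (2.0 * math.pi)
    return (r ** 2 + c ** 2) / r  # inverse of helix curvature r / (r^2 + c^2)


def glass_bending_stress(bend_radius, glass_radius=GLASS_RADIUS, e_glass=E_GLASS):
    """Peak bending stress (MPa) at the cladding surface: sigma = E * a / R."""
    return e_glass * glass_radius / bend_radius


if __name__ == "__main__":
    # Illustrative inputs only: 0.2 % EFL, 2.0 mm bore, cooled by 60 K.
    efl_cold = effective_efl(0.002, delta_t=-60.0)
    radius = helix_bend_radius(efl_cold, inner_diameter=2.0)
    print(f"EFL {efl_cold:.4%}, bend radius {radius:.1f} mm, "
          f"stress {glass_bending_stress(radius):.1f} MPa")
```

Under the helix assumption, the bend radius simplifies to R_b = r(1+ε)²/((1+ε)² - 1), where r is the radial clearance and ε the EFL. At fixed EFL, more clearance gives a gentler bend. At fixed clearance, extra EFL (for example from cold-induced shrinkage) tightens it. Real fibers may instead buckle sinusoidally or touch the wall intermittently, which is the behavior a contact-based FE model is meant to capture.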

Simulated displacement and contact pressure under jacket shrinkage.

Results

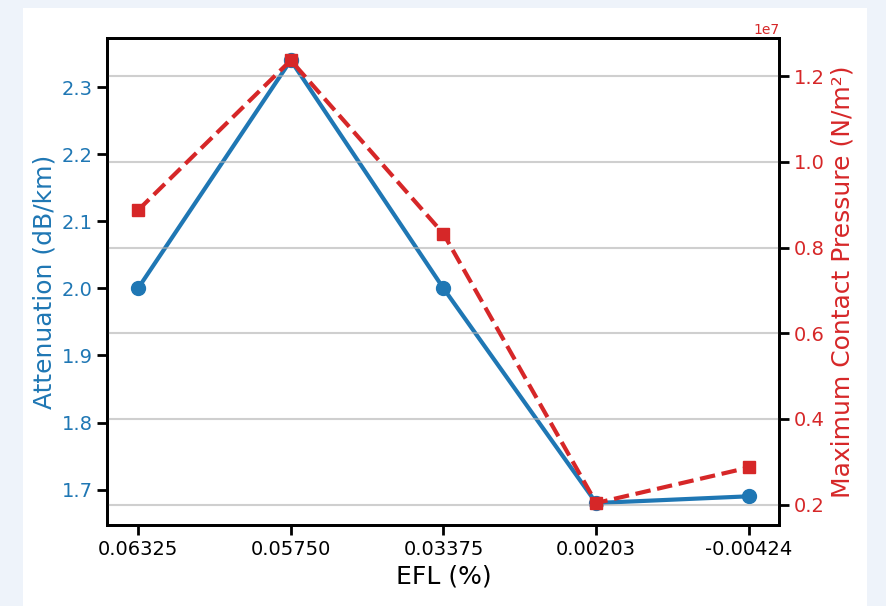

The model correlated strongly with attenuation measured on manufactured samples. The simulations accurately predicted how both EFL and inner-diameter variation affected stress distribution and optical performance.

Impact of EFL and inner diameter on simulated stress and measured attenuation.

Compression Simulation

To keep the mechanical response consistent across cable sizes, I modeled jacket compression between steel plates. Matching the simulated force-displacement behavior to physical tests helped determine the proper wall thickness for smaller designs.
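The wall-thickness matching step can be illustrated with a closed-form stand-in for the FE model. This sketch assumes the jacket behaves like a thin elastic ring under two opposed line loads. For that case, the classical diametral deflection is δ = (π/4 - 2/π)·F·R³/(E·I), with I = L·t³/12 for a wall of thickness t and length L. The sketch fits a stiffness from the initial linear part of a force-displacement test, then bisects for the wall thickness that reproduces that stiffness. The function names and numbers are hypothetical, and thick walls or the cable contents will make real behavior depart from this formula.

```python
import math

# Thin ring, two opposed loads: change in diameter along the load line
# delta = RING_COEFF * F * R**3 / (E * I)   (classical closed form, ~0.1488)
RING_COEFF = math.pi / 4 - 2 / math.pi


def fitted_stiffness(displacement, force):
    """Least-squares slope through the origin, k = sum(F*d) / sum(d*d),
    for the initial, linear part of a plate-compression test."""
    num = sum(f * d for f, d in zip(force, displacement))
    den = sum(d * d for d in displacement)
    return num / den


def ring_stiffness(wall, inner_diameter, length, modulus):
    """Diametral stiffness F/delta (N/mm) of a thin ring; mm and MPa inputs."""
    r_mean = (inner_diameter + wall) / 2.0
    inertia = length * wall ** 3 / 12.0  # second moment of the wall section
    return modulus * inertia / (RING_COEFF * r_mean ** 3)


def wall_for_stiffness(target_k, inner_diameter, length, modulus,
                       lo=1e-3, hi=10.0, tol=1e-9):
    """Bisect for the wall thickness whose ring stiffness equals target_k.
    Stiffness rises monotonically with wall thickness in this model."""
    k_lo = ring_stiffness(lo, inner_diameter, length, modulus)
    k_hi = ring_stiffness(hi, inner_diameter, length, modulus)
    if not k_lo <= target_k <= k_hi:
        raise ValueError("target stiffness outside the bracketed wall range")
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if ring_stiffness(mid, inner_diameter, length, modulus) < target_k:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


if __name__ == "__main__":
    # Hypothetical numbers: calibrate an effective modulus on the larger
    # cable (6.0 mm ID, 1.0 mm wall), then size a 4.5 mm ID cable to match.
    d = [0.1, 0.2, 0.3]          # mm, placeholder plate-test displacements
    f = [5.0, 10.0, 15.0]        # N, placeholder forces
    k_ref = fitted_stiffness(d, f)
    e_eff = k_ref / ring_stiffness(1.0, 6.0, 100.0, 1.0)  # model is linear in E
    print(wall_for_stiffness(k_ref, 4.5, 100.0, e_eff))
```

Bisection is used so that `ring_stiffness` could be swapped for an interpolated table of FE results. For the closed form itself, equal stiffness reduces to keeping t/ID constant, so the wall scales in proportion to the inner diameter.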

Complex Modeling & Next Steps

To improve realism, I also developed a more detailed multi-material model that includes the glass core and cladding, a softer inner acrylate layer, a harder outer acrylate shell, and aramid yarn. This version is computationally heavier, but with further development it may provide deeper insight into stress transfer between materials, paving the way for more accurate prediction tools.
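One simple piece of the stress-transfer picture is how an axial load splits between perfectly bonded layers that share the same strain. Under that condition, each layer carries a fraction E_i·A_i / Σ E_j·A_j. The sketch below assumes this iso-strain condition and uses typical, order-of-magnitude placeholder values: 125 µm glass at 72 GPa, a 190 µm primary acrylate near 1 MPa, and a 245 µm secondary acrylate near 1 GPa. Aramid yarn could be appended as another `Layer` with its own area and modulus. The `Layer` class and function names are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class Layer:
    name: str
    area: float     # mm^2, cross-section carrying axial load
    modulus: float  # MPa


def annulus(r_in, r_out):
    """Cross-sectional area of a ring between two radii (mm)."""
    return math.pi * (r_out ** 2 - r_in ** 2)


def axial_load_shares(layers):
    """Fraction of an axial load carried by each layer, assuming all layers
    are perfectly bonded and see the same axial strain (iso-strain)."""
    ea = [layer.modulus * layer.area for layer in layers]
    total = sum(ea)
    return {layer.name: x / total for layer, x in zip(layers, ea)}


# Typical order-of-magnitude placeholders, not the project's material data.
coated_fiber = [
    Layer("glass", annulus(0.0, 0.0625), 72e3),
    Layer("primary acrylate", annulus(0.0625, 0.095), 1.0),
    Layer("secondary acrylate", annulus(0.095, 0.1225), 1e3),
]

if __name__ == "__main__":
    for name, share in axial_load_shares(coated_fiber).items():
        print(f"{name:20s} {share:.4%}")
```

With these placeholders, the glass carries roughly 98 % of the axial load and the soft primary carries almost none. The primary layer's real job, cushioning lateral and microbending loads, does not appear in an iso-strain estimate. A 3D contact model like the one described above is needed to capture it.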